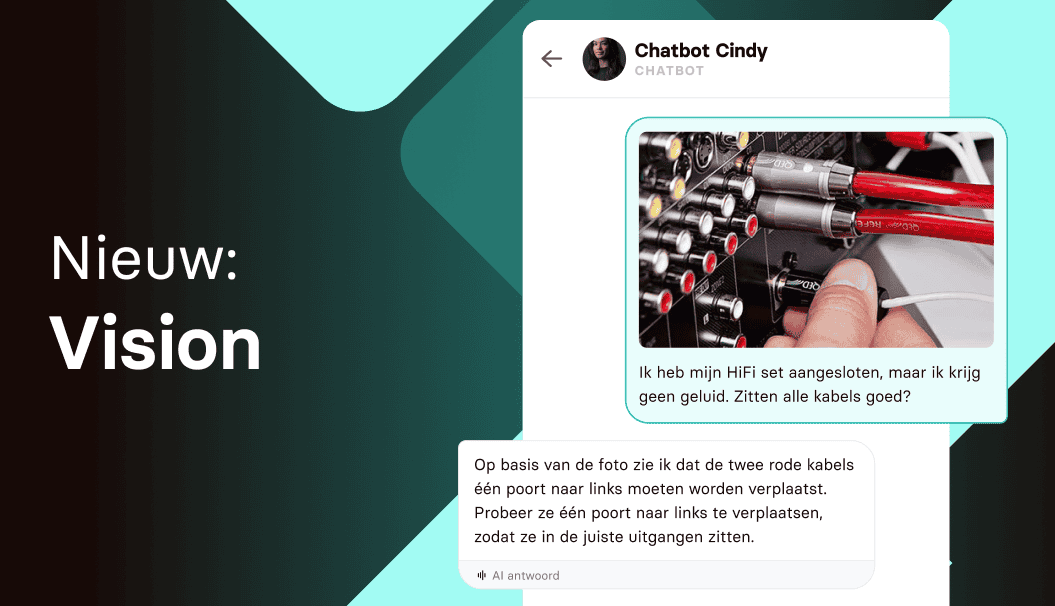

General-purpose chatbots have been able to understand images for some time now. Watermelon is now making this possible for AI-powered customer service as well.

Watermelon builds its own offering largely on OpenAI’s GPT-4o. The model leans heavily on improved multimodality compared to previous LLMs: it can interpret text, sound, and images better than before and combine that information. Watermelon, which allows companies to build their own GPT-4o-based chatbot, focuses specifically on vision. Its customer service chatbots can now interpret images that users send along with their messages, with no additional actions required.

Many possibilities

Alexander Wijninga, CEO and founder of Dutch company Watermelon, discusses how vision extends the functionality of AI. “For example, imagine a veterinarian receiving a photo of a spot on a pet’s skin, or a technology company using a photo to determine what type of repair is needed. Through vision, chatbots can now answer more questions when images are critical to getting to a solution.”

Wijninga promises that after uploading a photo, customers will receive an answer to their question about it directly from the chatbot, taking companies’ customer interaction “to a new level.”

The current functionality is not final, however, Watermelon says. For example, the current version of Vision cannot yet correctly locate objects within an image. “A simple example: If you share a picture of a room with a chair with a chatbot and ask where the chair is, the chatbot cannot currently answer that correctly,” explains Wijninga.
